Tool Evaluation Card Sort

Problem

Moz historically made assumptions that created friction for our users, which led to high churn and lower long-term retention. The value of the tool became buried, and users often did not find the core value in the product when they needed it. Previous research had identified several workflows based on lifetime value segments, but this segmentation and analysis still did not get to the core of surfacing more value to users in the critical first 3 months of their subscription.

This type of project was unlike anything previously attempted at Moz. Identifying the gap in how tools were presented, developing and validating the problem statements, creating the business case (and pitching it), setting goals and milestones, and receiving vague directional input from a continuously changing leadership team all created additional complications that had to be addressed and overcome for the project to succeed.

Role: Team manager, lead researcher, project owner

Opportunity

The opportunity in this project is reimagining how the product is presented to users and how it provides value, in order to maintain market share and reduce churn. To be successful going forward, the product should be less a collection of tools and prescriptive workflows and more a semi-configurable workspace that addresses the needs and goals of the people using it.

Within a user-aware framework, there should be frictionless growth opportunities for both the core value of the tools and add-on features, creating an even stickier ecosystem that allows users to excel.

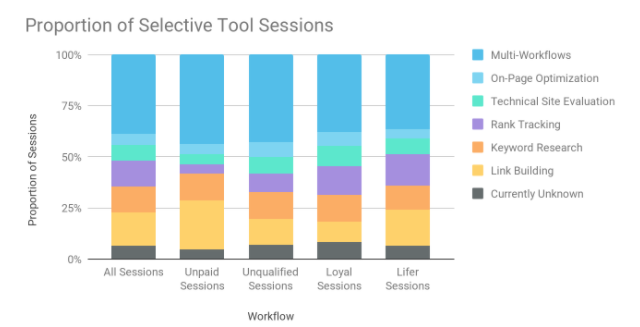

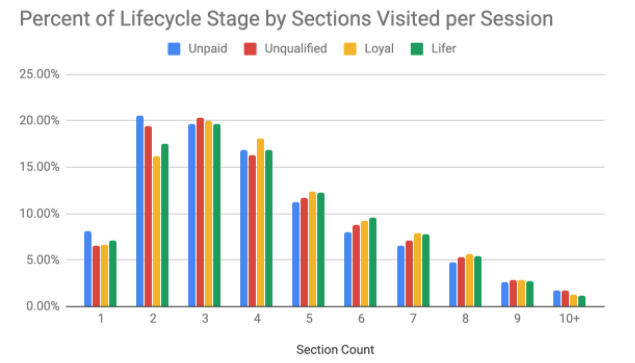

Examples of workflow data gathered from previous research efforts

Thesis

Users of the Moz Pro tool will naturally group the tools based on the output they are seeking. Users within similar persona cohorts will group tools in similar ways, and the groupings may vary from cohort to cohort.

Questions this activity should answer

Do users interact with the tools in similar ways, and do those ways align with each tool's intended purpose?

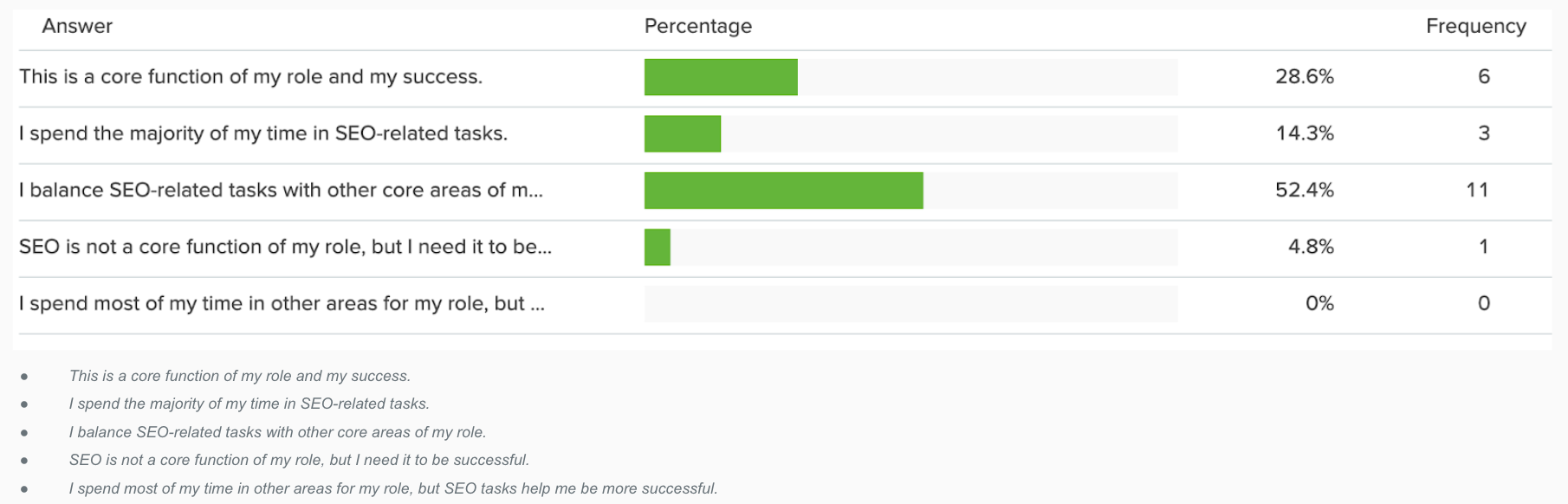

How does user skill level affect how users interact with our tools and how they perceive tool value?

What tools are users consistently missing?

What are the commonalities among either the tools that are missed or the users who are missing them?

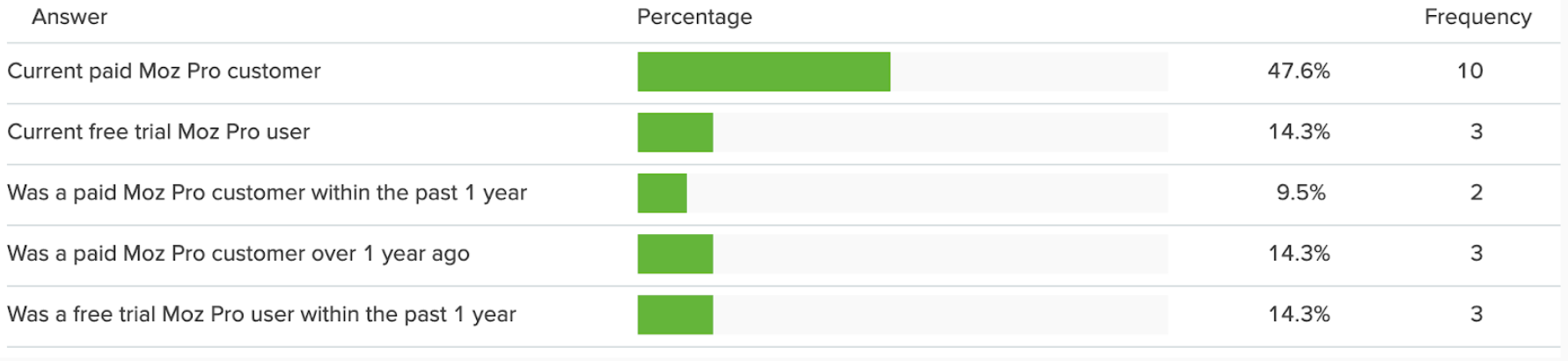

The participant landscape

Over the course of about a week, we emailed a cross-section of our user research database to recruit 20-25 participants for this study. Because we ran this as a closed, unmoderated card sort, the directions had to be smoke-tested before we sent the study out, to ensure participants could follow them without a moderator. This gave us confidence that the responses would be meaningful.

Research Method

Closed, unmoderated card sort

Tool: Optimal Workshop

This activity asked users to sort the areas of the current Moz Pro tool into 4 categories. Both the categories and the cards were pre-defined, and participants could not create additional cards or categories. Cards could not be left unsorted.

Categories:

Reporting | Tracking: These are tasks that I perform when I need to generate charts, graphs, reports, or other types of information that I can send to others or use for future tracking of my work.

Not Used In My Workflow: These are areas of the tool that I do not use.

Research | Analysis: These are tasks that I perform when I need to research my company/site, competitors, keywords, or other tasks that are exploratory in nature.

Action | Strategy: These are tasks that I perform when I am looking to make a change, correct an error, add sites/keywords/competitors, or other activities like those.

Findings

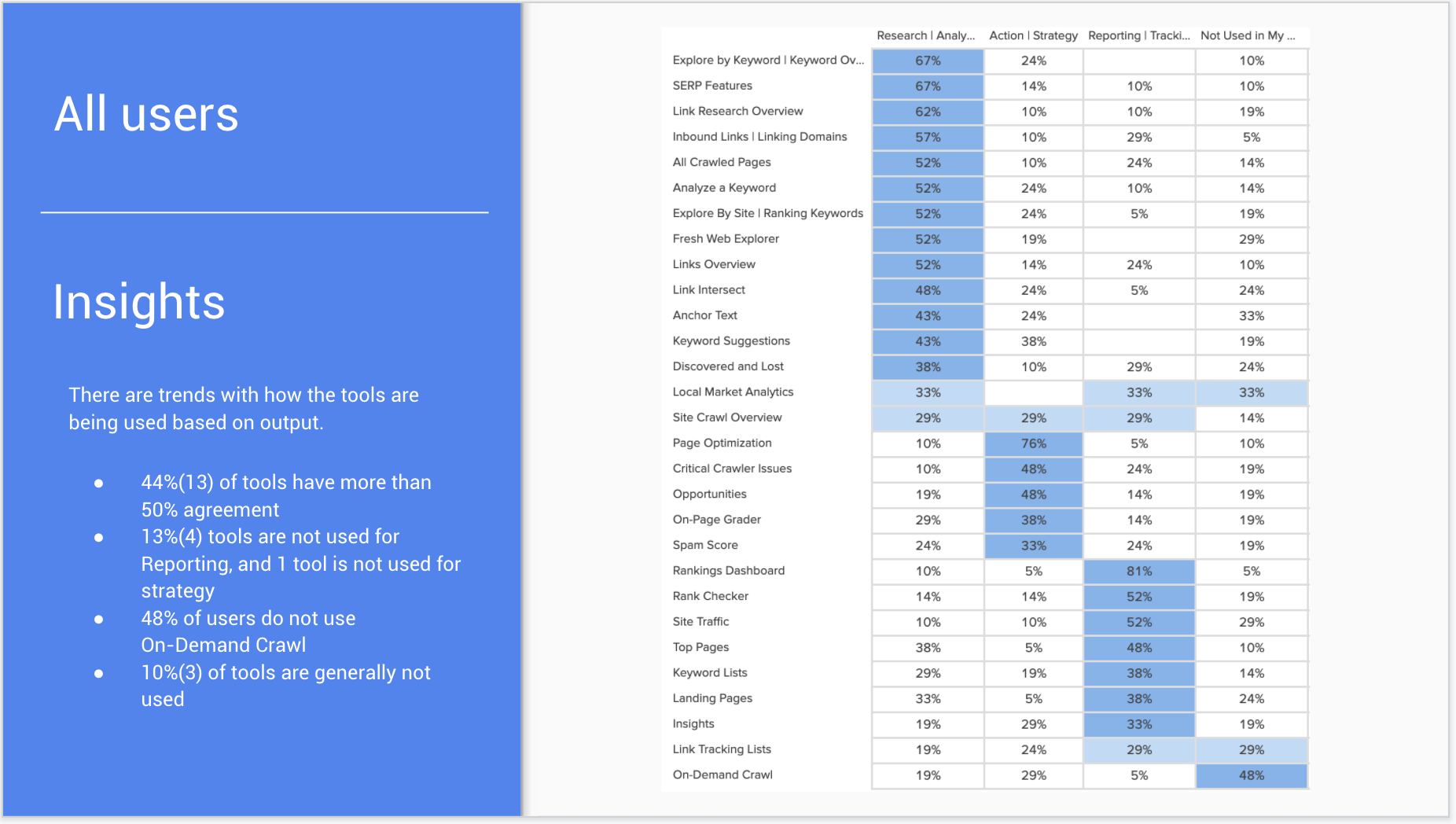

Looking at all users

Statistically significant results showed that users followed common interaction patterns. While there was some divergence as we segmented the participants, the data shows clear trend groupings in the popular placements matrix.
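For readers unfamiliar with a popular placements matrix, the sketch below shows one way such a matrix could be computed from raw closed card sort results: for each card, the percentage of participants who placed it in each category. The category names come from this study; the function name `popular_placements`, the input shape, and the card names ("Card A", "Card B") are illustrative assumptions, and the sample responses are made up. It is not a reproduction of how Optimal Workshop calculates its report.

```python
from collections import Counter, defaultdict

# The four pre-defined categories used in this study.
CATEGORIES = [
    "Reporting | Tracking",
    "Research | Analysis",
    "Action | Strategy",
    "Not Used In My Workflow",
]


def popular_placements(sorts):
    """Return {card: {category: percent of participants}}.

    `sorts` is a list with one dict per participant, mapping each
    card name to the category that participant placed it in.
    (This input shape is an assumption made for illustration.)
    """
    counts = defaultdict(Counter)
    for participant in sorts:
        for card, category in participant.items():
            counts[card][category] += 1

    matrix = {}
    for card, counter in counts.items():
        total = sum(counter.values())
        matrix[card] = {
            category: 100.0 * counter[category] / total
            for category in CATEGORIES
        }
    return matrix


# Made-up responses from four hypothetical participants.
example_sorts = [
    {"Card A": "Research | Analysis", "Card B": "Reporting | Tracking"},
    {"Card A": "Research | Analysis", "Card B": "Not Used In My Workflow"},
    {"Card A": "Action | Strategy", "Card B": "Reporting | Tracking"},
    {"Card A": "Research | Analysis", "Card B": "Reporting | Tracking"},
]

example_matrix = popular_placements(example_sorts)
```

Filtering the list of participants by persona or expertise before calling the function would give the segmented views discussed in the following sections.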

Trends by persona

Filtering down the data by personas surfaced some interesting details about how these specific cohorts of people interacted with the areas of the tool. In some cases there was 100% agreement among participants on the intent of the tool.

Similarities arose in expertise

Filtering the data by expertise showed that in many cases certain levels of proficiency were directly correlated with persona-based roles. This finding led us to look more deeply at the product design personas for opportunities to be clearer about how we were presenting them.

Outcome

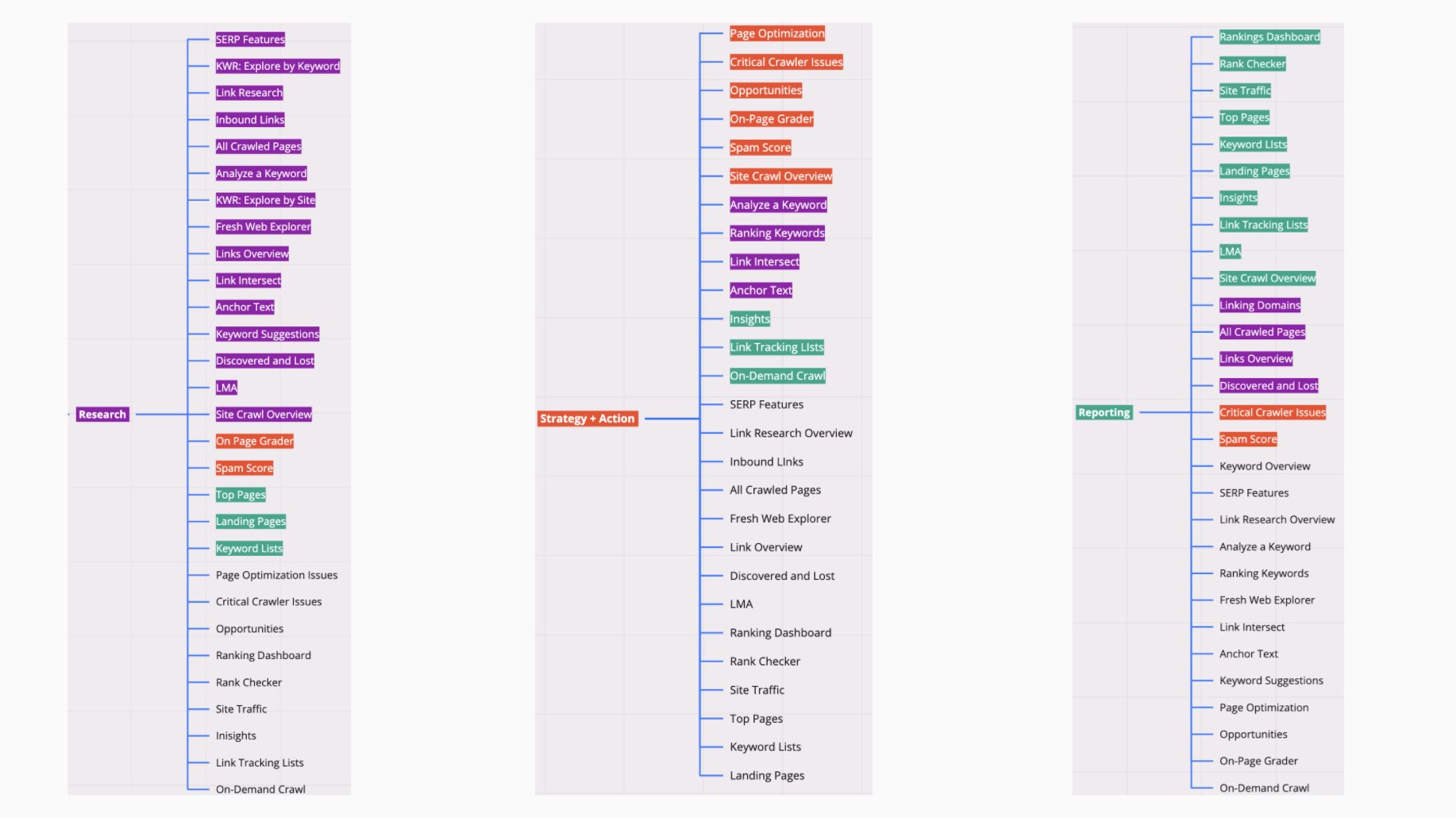

After the analysis was done and weighted based on cohort (persona) and volume of user type in our tool, the following groupings were created. These findings green-lit efforts for further testing among a wider base of Moz Pro users.

Tools that are color-matched to their category had 50% or more agreement that their intent was primarily for that category. Tools shown in an unmatched color had 25%-49% agreement, and uncolored tools had 24% or less agreement.
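The color rule above can be written as a small lookup, sketched here in Python. The function name `agreement_tier` and the tier labels are illustrative assumptions. The stated ranges leave gaps between 24% and 25% and between 49% and 50%, so this sketch assumes that anything below 25 is uncolored and anything below 50 is unmatched.

```python
def agreement_tier(percent):
    """Map a card's agreement percentage to the display tier described above.

    Assumed boundaries: >= 50 is color-matched, 25 up to (but not
    including) 50 is unmatched, and anything below 25 is uncolored.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    if percent >= 50:
        return "matched color"
    if percent >= 25:
        return "unmatched color"
    return "uncolored"


# A card with 75% agreement on its category falls in the top tier.
example_tier = agreement_tier(75)
```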

Value

This project was the cornerstone for validating the creation of a new product framework and for reassessing the level of effort we are putting into supporting legacy tools.

Next Steps

Following this project, 2 more deep-dive research efforts have spun off from these findings. The BI team has begun implementing more granular event tracking to gather use-based data on product engagement, which will ultimately make way for a continually refined, use-based persona map.